Is it possible to save scans from the ScanSnap iX1600 either to a cloud service or to a NAS, and then have DEVONthink import them automatically when the application starts? The aim is to be able to scan even when my Mac is not running.

Yes, assuming, that is, that the scanner is happy to operate stand-alone, i.e. with the Mac off. Simply set DT up to index the appropriate folder and create a smart rule to move anything in that indexed group to the global inbox.

As per the link, I set up a folder as my DT import folder; actually an alias to the Global Inbox

I would direct the scans to this folder

Hi Blanc,

Thank you very much for your answer.

I found out that although the ScanSnap cannot save directly to a NAS without running the ScanSnap Home software (and therefore the Mac), it can save to Google Drive. My Synology NAS can in turn sync with Google Drive, which ultimately puts the scanned data on the NAS.

As far as I know, DevonThink cannot import directly from Google Drive. However, I assume that indexing the NAS folder with DevonThink should not be a problem.

Is setting up the synchronisation per se and the SmartRule to move everything to the Global Inbox a difficult thing?

What synchronization?

It sounds like you have too many stepping stones in your process.

Where can the ScanSnap save to in Google Drive, i.e, what specific location?

Hi Bluefrog,

I should have written “indexing”, not “synchronization”. Please excuse the confusion. As per Blanc: “Simply set DT up to index the appropriate folder and a smart rule to move anything in that indexed group to the global inbox.”

The process of routing the scanned document from ScanSnap to DevonThink without the Mac running all the time may sound a little complicated, but I am not aware of a simpler solution. DevonThink, as far as I know, cannot import from a cloud drive (e.g. Google Drive), but it can import from a NAS; ScanSnap cannot export directly to a NAS, but it can export to Google Drive. Hence the detour via Google Drive. The folder in Google Drive that stores the scans can be freely created and then selected in ScanSnap (the whole process works reliably).

Provided the process works as described by Blanc (indexing, importing), the whole process could be used for yet another application, namely to serve multiple users/DT databases. While the regular way (export to DevonThink application in the ScanSnap profile) does not allow any distinction of users, with indexing each database could access a specific storage folder and thus import exactly the corresponding scans. Of course, this would require setting up 2 cloud profiles in ScanSnap with respective specific Google Drives.

I’ve tried to use a smart rule before to accomplish this, but ended up with duplicates of indexed files and the imported version.

Say one starts off with file A, B and C in macOS Finder folder Z:

(Z): A B C

Then indexing folder Z will result in the DEVONthink group DTX:

(DTX): A B C

If a certain smart rule imports A, B and C, you’ll end up with those files in DT group DTY

(DTY): A B C

When searching for files in DT, I always ended up with duplicate results from both DTX and DTY.

That shouldn’t be the case if you use the Move into Database action.
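One way to see where such duplicates come from is to check which records are still indexed and which have already been imported. The following is only a rough sketch: it assumes the `selection` property, the record properties `indexed`, `name` and `location`, and the `log message` command behave as they are described in DEVONthink 3's scripting dictionary, so it is worth confirming the terms in Script Editor before relying on it.

```applescript
-- Sketch: report whether each selected record is indexed or imported.
-- Assumption: `indexed`, `location` and `log message` exist as named here.
tell application id "DNtp"
	repeat with theRecord in (selection as list)
		if indexed of theRecord then
			set theState to "indexed (file still lives in the Finder folder)"
		else
			set theState to "imported (file lives inside the database)"
		end if
		-- Write one line per record to DEVONthink's Log window.
		log message (name of theRecord) info (theState & ", group: " & (location of theRecord))
	end repeat
end tell
```

Select the search results that look like duplicates and run the script. If one copy shows up as indexed and the other as imported, the smart rule probably copied the files instead of moving them into the database.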

Why should DEVONthink not be able to index a Google Drive Folder? If it’s integrated in the Finder like an iCloud Drive folder or a Dropbox folder it can be indexed. And then a simple Smart Rule could import the scans into a database.

It would require me to let the Google Drive app run on my Mac, which I do not prefer to do. But if you prefer to do so, that would work.

Thanks for the clarification.

You are correct - you can’t Index an online-only repository.

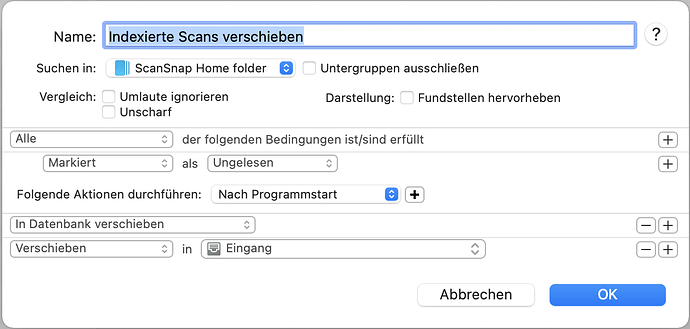

I have tried to implement the process you described. The indexing works perfectly, but the SmartRule for moving does not yet work. I’ve played around a bit, but I don’t understand what’s wrong yet. Below is the smart rule for moving from the indexed folder to the global inbox:

Look in: “indexed folder”

“All” conditions are met:

Conduct the following actions: “After programme start”

“Move to database”

“Move” to “Global Inbox”

What is not correct?

- What location are you targeting with the smart rule?

- Are you only using After programme start ?

What location are you targeting with the smart rule?

The idea is that scans are indexed in the ScanSnap Home folder (the “indexed folder”). Then, following Blanc’s instructions, they should be moved to the Global Inbox (I assume this is what you refer to as the target location). From there on, other smart rules should sort the scans into subfolders, based on keywords.

Are you only using After programme start ?

What I described is basically what the dialog box looks like with the settings I have made. I am not sure if “after programme start” is the best parameter.

One additional question came up: what is the proper location for the indexed folder? The application creates it within the inbox, but I noticed that it does not update properly there; after moving it to the database it worked fine. Should it be within the database?

As noted in the documentation, an indexed group may not update automatically when indexing a cloud-synced location.

There is no proper location for an indexed folder, except one that makes sense in your situation.

As a general rule, the Global Inbox is a good place as it’s made for not-yet-filed or transient data.

Can you post a screen capture of your smart rule?

Hi Bluefrog,

Thank you very much for your answer. To answer in order:

- the synced folder is not in the cloud, but locally on my iMac SSD. I have also emptied the trash, as I had seen in an earlier reply from you.

- I also thought that the Global Inbox would be the right place (since the folder is created there by default). However, the synchronisation doesn’t work there, and it works when placed in the database. I conclude from your answer that this should not be the case. I suggest we ignore this for the moment.

I have now been able to get the whole process to work by changing the condition of the rule to “Marked” as “Unread”. Below is the SmartRule as it is at the moment:

Now I assume that there might still be potential for optimisation. For my understanding I still have 3 questions:

- is it right to take “Marked” as “Unread” as a condition for the rule?

- is there a better time for the execution of the rule than “after programme start”, or is this correct?

- why is the step “Move to database” mentioned by Blanc necessary, or is this implemented correctly in my SmartRule?

Thanks again for all your answers.

You’re welcome!

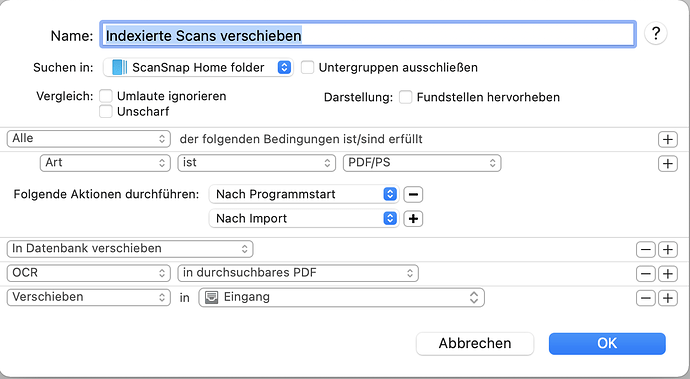

- Using the unread status is fine but you could also just use Date Added is This Hour and Kind is PDF/PS.

- If you want the process to trigger while you’re working, you can use event triggers like On Import or a time-based one like Hourly. You can even set more than one event trigger so you can use the On Startup trigger as well as a time-based one.

- The Move into Database action moves the file into the database, removing it from the indexed folder in the Finder. This keeps the Finder folder empty and waiting for further scan files to be received.
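For anyone who prefers a script action, the two steps (Move into Database, then Move to the Global Inbox) could be sketched roughly as below. This is a sketch under assumptions, not the built-in implementation: it assumes that the `consolidate` command is the scripting counterpart of Move into Database, and that `incoming group of inbox` refers to the Global Inbox. Both are worth checking against the scripting dictionary of your DEVONthink version.

```applescript
on performSmartRule(theRecords)
	tell application id "DNtp"
		-- Assumption: the Global Inbox is reachable as the incoming group of `inbox`.
		set theInbox to incoming group of inbox
		repeat with theRecord in theRecords
			-- Assumption: `consolidate` pulls an indexed file into the database,
			-- which removes it from the indexed Finder folder.
			if indexed of theRecord then consolidate record theRecord
			-- File the now-imported record in the Global Inbox.
			move record theRecord to theInbox
		end repeat
	end tell
end performSmartRule
```

The built-in actions are the simpler choice. A script like this is mainly useful if you want to add logging or extra checks between the two steps.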

Thank you very much for the clarifications. Everything works.

I made another change in the SmartRule because I also want to run OCR through DevonThink (and not through the ScanSnap). The SmartRule now looks like this (and works):

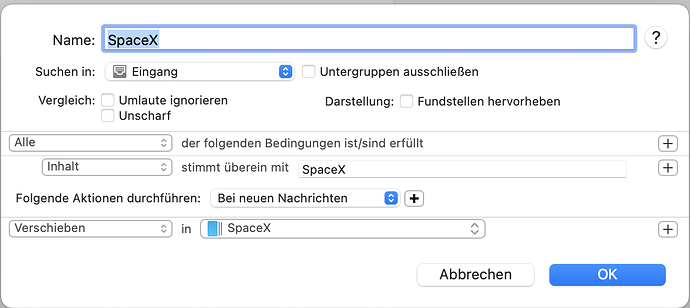

However, what no longer works after implementing this additional OCR step:

After the document had been moved to the Global Inbox, the keyword-based sorting into subfolders was previously triggered. Now I have to trigger this smart rule manually. I have unsuccessfully tried various triggers such as “After import”, “On new messages”, “After creation”, “After move”. The rule currently looks like this:

Do you have any ideas what the problem could be?

If you can wait until I’ve finished a bowl of noodles, I’ll post the answer for you

Now then…: basically, the trigger has already been used up for the record by the first rule. The simplest solution to the problem is to add the following script as the last step of your first rule:

```applescript
on performSmartRule(theRecords)
	tell application id "DNtp"
		repeat with theRecord in theRecords
			-- Re-fire the On Import event for each record handled by this rule
			perform smart rule trigger import event record theRecord
		end repeat
	end tell
end performSmartRule
```

With that, the on import trigger will fire for each of the records handled by the first rule.